Flicker Beyond Perception Limits - A Summary of Lesser-Known Research Results

Since the introduction of fluorescent lamps, the lighting industry has known about flicker issues. However, many aspects are still unclear. Effects beyond the flicker fusion threshold in particular are mostly ignored, and sometimes even denied. Dr. Walter Werner, CEO of Werner Consulting, summarizes research results indicating that further research is needed and that high-quality lighting very likely requires a much higher PWM modulation frequency.

For the most part, light flicker has not been a topic of conversation in lighting, except perhaps during the 1980s, when research was being done on fluorescent and discharge lamps. Simple solutions and electronic ballasts seemed to solve the issues then, and the subject was forgotten again. LED lighting, however, has changed the situation once more, and light flicker is now a very popular topic of conversation, raising several questions: Why should we care about flicker at all? What does flicker fusion really mean? Is there a safe threshold for flicker? Should we care about flicker beyond the visible experience? Do we understand flicker, and do we know enough about its effects?

Figure 1: Human vision has evolved and adapted to irregular and slow fluctuations in light intensity like what we get from sunlight filtering through the leaves of a tree or a campfire at night

A Short History of Flicker

Flicker in lighting is a relatively young phenomenon. Our biology has adapted to changes in the light provided by the sun, the moon, fire and candles. But all these changes are "slow" and "irregular" compared to the flicker created by technical effects. The same applies to the old thermal artificial light sources such as gas light or incandescent electric lighting: the thermal inertia of the hot filament keeps the changes in light output slow and, with a sufficiently high mains frequency, small and invisible. Only incandescent lamps on the 16.67 Hz circuits used by the railways in some areas in the early days of electrification showed visible flicker.

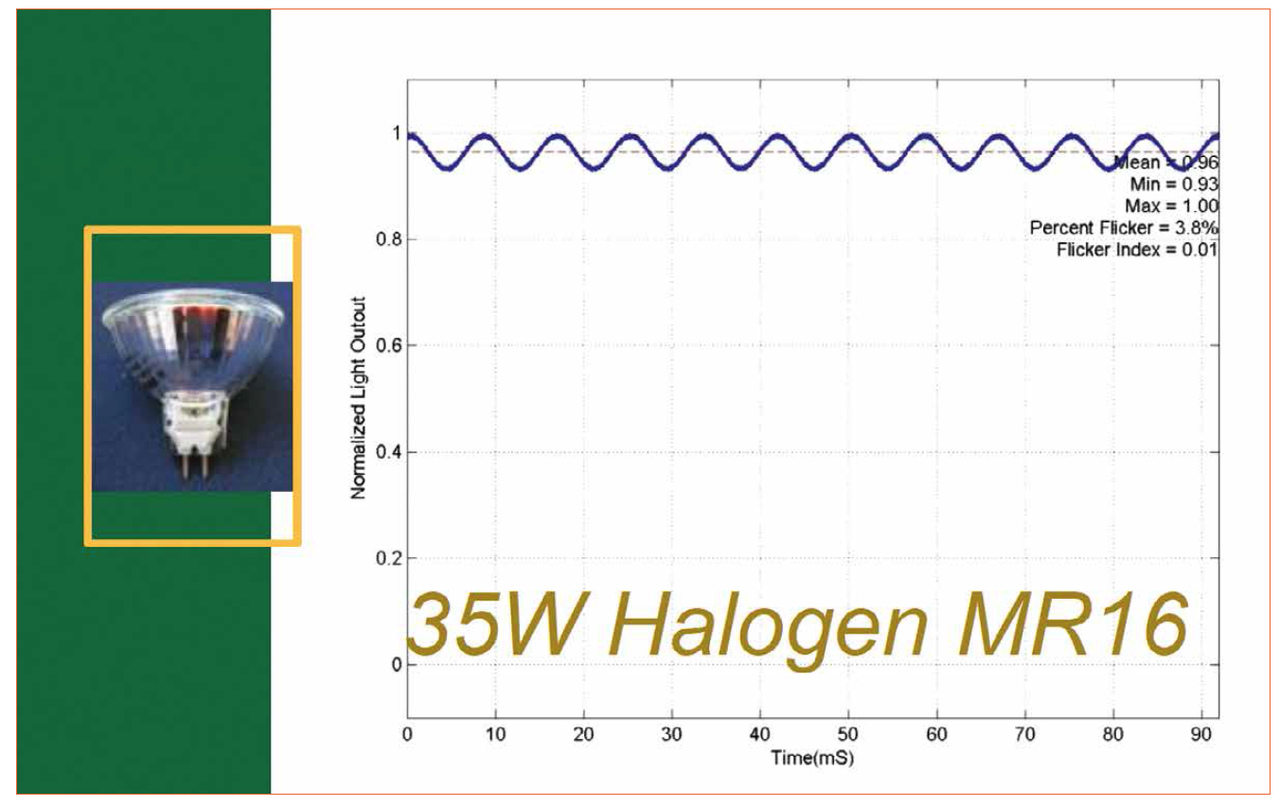

Figure 2: Even incandescent and halogen lamps flicker, but at a grid frequency of 50-60 Hz virtually none of this flicker is visible (Credit: Naomi J. Miller, Brad Lehman; DoE & Pacific Northwest National Laboratory)

The first time flicker became an issue was with movies. The main question was how continuous movement could be created by a series of still frames shown rapidly one after the other. There are two aspects to this: how many frames per second are needed for a smooth impression, and how to avoid the flicker impression created by the short dark interval needed to transport the celluloid between frames. Experiments showed that a smooth impression of movement can be achieved with 15-18 frames per second, and a professional-quality smooth impression with 24 frames per second (the number of frames directly affects the cost of a movie, so low frame rates are favored). But when those movies were shown to a wider audience, some viewers experienced severe health issues. Further investigation made clear that these issues were not connected to the content of the movies; instead, the 18-24 Hz flicker was identified as the source of the problem. Today this is known as photosensitive epilepsy, a severe condition triggered by flicker mainly in the range between 8 and 30 Hz, with sensitivity decreasing above that range. To avoid the 8-30 Hz range without tripling the cost of the films, the movie industry tripled the shutter frequency and showed each frame three times before moving to the next. Projection now operates with flicker in the 60-80 Hz range, which has proved to circumvent photosensitive epilepsy. It also greatly reduced the overall flicker impression experienced when not looking directly at the screen. Experiments showed that the flicker impression disappeared completely for most of the audience above approx. 120 Hz.
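The shutter arithmetic above can be sketched in a few lines of Python. The helper names are hypothetical, and the 8-30 Hz band is simply the range quoted in the text, not a clinical model: the flicker frequency the audience sees is the frame rate multiplied by the number of light flashes per frame.

```python
# Hypothetical helpers: flicker frequency of a film projector.
# Assumption: the shutter flashes each frame `flashes_per_frame` times.
EPILEPSY_BAND_HZ = (8.0, 30.0)  # range quoted in the text


def projector_flicker_hz(frames_per_second: float, flashes_per_frame: int) -> float:
    """Return the light-flash frequency seen by the audience."""
    return frames_per_second * flashes_per_frame


def in_epilepsy_band(freq_hz: float) -> bool:
    """True if the frequency falls inside the quoted 8-30 Hz band."""
    low, high = EPILEPSY_BAND_HZ
    return low <= freq_hz <= high


for fps, flashes in [(24, 1), (24, 3)]:
    f = projector_flicker_hz(fps, flashes)
    print(f"{fps} fps x {flashes} flash(es) -> {f:.0f} Hz, "
          f"in 8-30 Hz band: {in_epilepsy_band(f)}")
```

With one flash per frame, 24 fps lands at 24 Hz inside the critical band; with three flashes the same film runs at 72 Hz, outside it, without using a single extra frame of celluloid.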

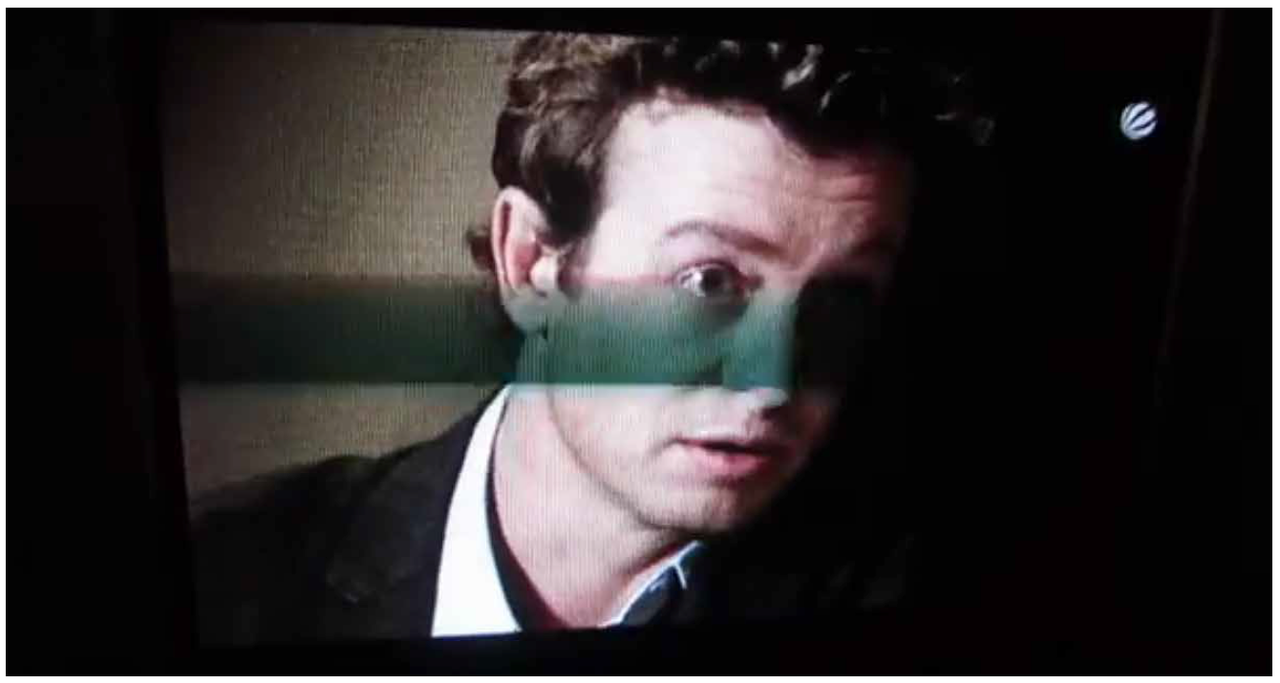

Figure 3: Photos of CRT TV screens show the 25/50 Hz flicker as banding, an effect that looks very similar to what appears when taking pictures or videos under flickering LED lamps

Flicker and Television

The development of the TV screen and the fluorescent lamp right after World War II brought flicker back into focus, and new attempts were made to understand it better.

To keep the transmission bandwidth within a reasonable range, TV screens could handle just 25 (Europe) or 30 (US) frames per second at a reasonable number of lines. To stay out of the critical flicker frequency range and reach a 50/60 Hz refresh rate on the screen, broadcasting was organized in interlaced half-frames: one half-frame carried the odd lines, the next the even lines. This was a really tricky way to get out of the flicker frequencies that caused photosensitive epilepsy. Even so, TV sets were known for the visual flicker impression they caused, and in some areas they were called a "flicker box" (German: "Flimmerkiste").
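The interlacing trick can be sketched as follows. This is a simplified illustration that ignores real broadcast details such as half lines and blanking intervals; the function names are hypothetical. Each full frame is split into two half-frames (fields), so the screen is refreshed twice per transmitted frame.

```python
# Simplified sketch of interlaced scanning; real TV standards include
# details (half lines, blanking intervals) that are ignored here.


def field_order(num_lines: int) -> list[list[int]]:
    """Split lines 1..num_lines into two fields: odd lines, then even lines."""
    lines = range(1, num_lines + 1)
    odd_field = [n for n in lines if n % 2 == 1]
    even_field = [n for n in lines if n % 2 == 0]
    return [odd_field, even_field]


def field_rate_hz(frames_per_second: float, fields_per_frame: int = 2) -> float:
    """Each full frame is shown as `fields_per_frame` half-frames."""
    return frames_per_second * fields_per_frame


print(field_order(6))     # [[1, 3, 5], [2, 4, 6]]
print(field_rate_hz(25))  # 50 (Europe)
print(field_rate_hz(30))  # 60 (US)
```

The transmitted bandwidth stays at 25/30 full frames per second, while the screen as a whole is lit at 50/60 Hz.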

The TV exploited flicker fusion to the maximum: the picture was actually drawn by a single spot that moved rapidly line by line across the screen, relying on the eye to fuse it into a full image.

The eye's integration of flicker was believed to be caused by the biochemical detection process, which has a substantial relaxation time. The experience of a full-screen picture written by a single fast-moving spot supported this belief.

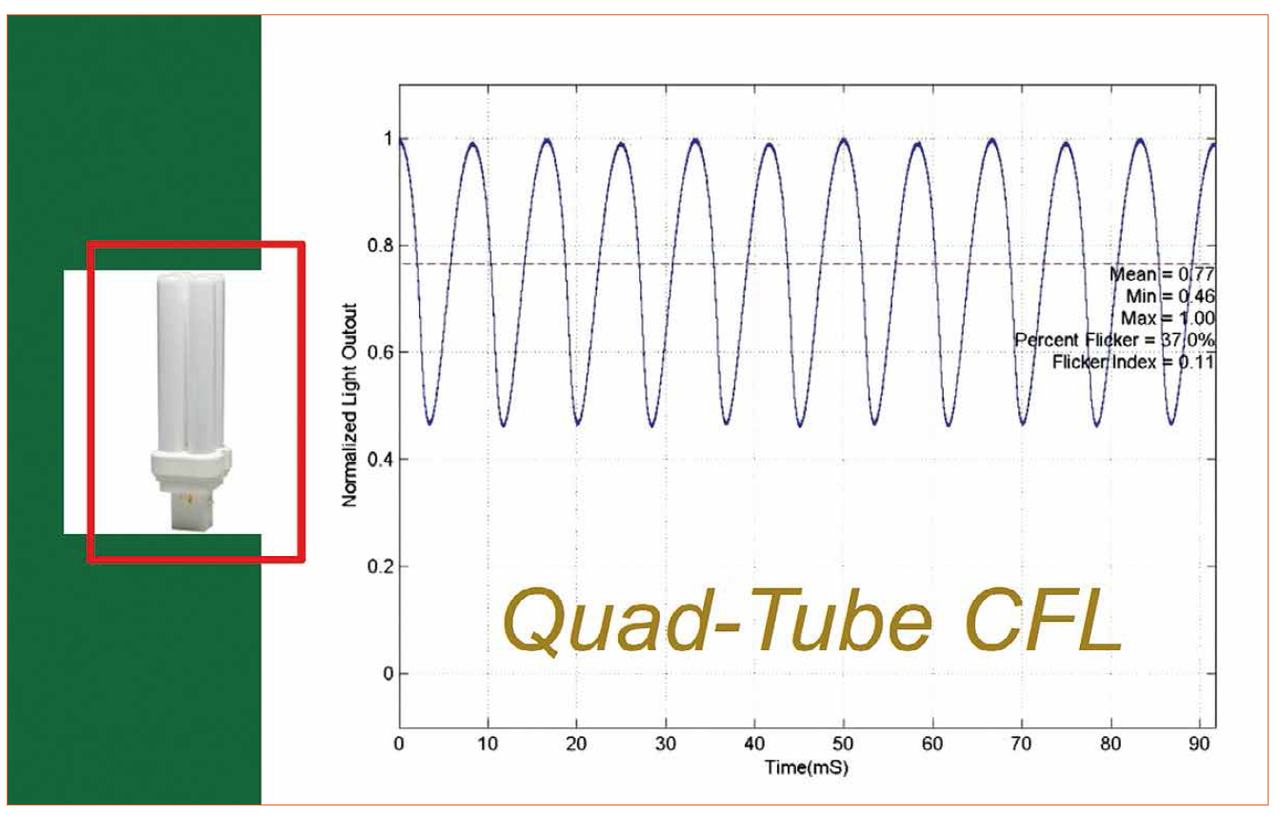

Figure 4: With the introduction of FL lamps and the use of magnetic ballasts, flicker issues became apparent and critical in lighting. The issues were solved by using multiple lamps operated on differently shifted phases (Credit: Naomi J. Miller, Brad Lehman; DoE & Pacific Northwest National Laboratory)

For photographers, the TV added a challenge that had been unknown until then: taking a "screenshot" with a reasonably short exposure delivered a single bright dot with a short tail, and with longer exposures a section of the screen stayed grey (there were no black screens in those days) instead of showing a nice picture. Also, film or TV camera shots of TV screens ended up with vertical moving bars caused by interference if no special precautions were taken.
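One simple way to estimate how fast such interference bars drift is to treat the camera as sampling the screen's refresh at its own frame rate. The sketch below assumes that simplified model (it ignores exposure time and scan geometry) and uses a hypothetical helper name: the refresh frequency is folded to the nearest multiple of the camera rate, and the remainder is the slow beat seen as moving bars.

```python
def alias_frequency_hz(signal_hz: float, sample_rate_hz: float) -> float:
    """Apparent frequency after sampling `signal_hz` at `sample_rate_hz`.

    Folds the signal frequency to the nearest multiple of the sample rate,
    which gives the slow beat that shows up as drifting bars.
    """
    nearest_multiple = round(signal_hz / sample_rate_hz) * sample_rate_hz
    return abs(signal_hz - nearest_multiple)


# 50 Hz European TV filmed with a 24 fps film camera
print(alias_frequency_hz(50, 24))  # 2 -> bar pattern repeats about twice per second
# Camera locked to the TV field rate: 50 Hz filmed at 25 fps
print(alias_frequency_hz(50, 25))  # 0 -> bars stand still
```

Synchronizing the camera to the screen's refresh is one example of the "special precautions" mentioned above: the beat drops to zero and the bars stop moving.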

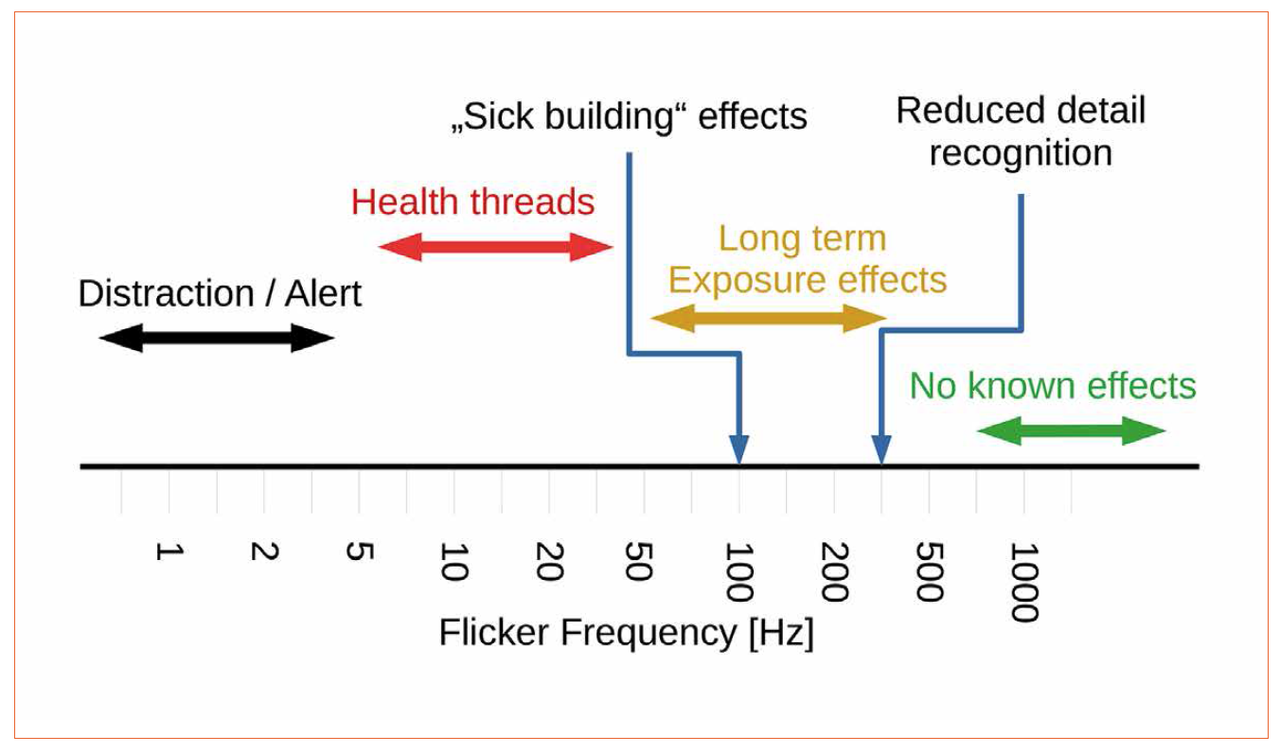

Figure 5: Various flicker frequencies have different effects on health or visual and cognitive performance

Flicker and Lighting

Just as the TV exposed us to flicker in our living rooms, the fluorescent lamp took it to the workplace. The flicker of the early fluorescent lamps was not experienced as a problem, because in most applications it was substantially reduced by operating multiple lamps per luminaire on differently shifted phases. This substantially reduced the depth of the dark phase and raised the resulting flicker frequency well above 100 Hz.

Ultimately, in larger rooms (such as production or sports halls) with 3-phase connections, multiple lamps per luminaire, each lamp on either an inductive or a capacitive ballast with the corresponding phase shift, and luminaires with opaque white covers and little to no directionality, the residual flicker ended up above 600 Hz with a flicker depth of << 25% of the intensity! This created a practical "no flicker" experience. The setting also allowed TV recordings without disturbing stroboscopic effects.
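The effect of summing phase-shifted lamps can be sketched with a toy model. It assumes, purely for illustration, that each lamp's light follows the rectified supply waveform |sin(wt + phase)|, ignoring phosphor afterglow and the dark phase near the zero crossing. The ±15° lead/lag split between the inductive and capacitive lamp on each phase is a hypothetical value chosen to spread the dips evenly; it is not taken from the text.

```python
import math

# Toy model (assumption): each lamp's light output follows the rectified
# supply waveform |sin(wt + phase)|. Phosphor afterglow and the dark phase
# near the zero crossing are ignored; only the summation principle is shown.


def summed_light(t, mains_hz, phases_deg):
    """Total light of several lamps fed with phase-shifted supplies."""
    w = 2 * math.pi * mains_hz
    return sum(abs(math.sin(w * t + math.radians(p))) for p in phases_deg)


def percent_flicker(samples):
    """Modulation depth (max - min) / (max + min), in percent."""
    hi, lo = max(samples), min(samples)
    return 100.0 * (hi - lo) / (hi + lo)


def analyse(mains_hz, phases_deg, n=20000):
    """Return (percent flicker, ripple frequency in Hz) over one mains period."""
    period = 1.0 / mains_hz
    samples = [summed_light(k * period / n, mains_hz, phases_deg) for k in range(n)]
    # Each local minimum (where one lamp's output passes zero) is one ripple
    # cycle; the waveform is periodic, so the indices wrap around.
    minima = sum(
        1
        for k in range(n)
        if samples[k] < samples[k - 1] and samples[k] <= samples[(k + 1) % n]
    )
    return percent_flicker(samples), minima * mains_hz


configs = {
    "1 lamp": [0],
    "3 phases": [0, 120, 240],
    # Hypothetical lead/lag ballasts: +/-15 degrees around each mains phase
    "3 phases x lead/lag": [p + d for p in (0, 120, 240) for d in (-15, 15)],
}
for name, phases in configs.items():
    depth, ripple = analyse(50, phases)
    print(f"{name:22s} ripple {ripple:5.0f} Hz, flicker {depth:5.1f} %")
```

On a 50 Hz supply this toy model gives 100 Hz at 100% modulation for a single lamp, 300 Hz at about 7% for three phases, and 600 Hz at under 2% with the assumed lead/lag split, which is in line with the figures quoted above.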

With the higher efficiency of the newer, thinner fluorescent lamps, phosphors with shorter relaxation times, and highly directional anodized louvers in which each lamp illuminated a specific area, the lighting industry began creating noticeable flicker without realizing it. Technically, a single fluorescent lamp flickers at 100/120 Hz, with a fully dark phase covering some 25% of the time (the dark time depends strongly on the applied line voltage).
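Why the dark time depends on the line voltage can be illustrated with a simple threshold model. It assumes, hypothetically, that the lamp goes dark whenever the instantaneous supply voltage is below a fixed fraction `threshold_ratio` of its peak. Under that assumption the dark fraction is (2/π)·arcsin(threshold_ratio), and a lower line voltage makes the same re-ignition voltage a larger share of the peak, so the dark phase grows.

```python
import math

# Toy model (assumption): a fluorescent lamp on a magnetic ballast goes dark
# whenever the instantaneous supply voltage is below a fixed fraction
# `threshold_ratio` of its peak (re-ignition threshold).


def dark_fraction(threshold_ratio: float) -> float:
    """Fraction of the time during which |sin| < threshold_ratio."""
    return (2 / math.pi) * math.asin(threshold_ratio)


def dark_fraction_numeric(threshold_ratio: float, n: int = 100_000) -> float:
    """Same quantity by brute-force sampling of one mains period."""
    dark = sum(
        1 for k in range(n) if abs(math.sin(2 * math.pi * k / n)) < threshold_ratio
    )
    return dark / n


# A threshold at sin(22.5 deg), about 38 % of peak, gives the ~25 % dark time
r = math.sin(math.radians(22.5))
print(round(dark_fraction(r), 3), round(dark_fraction_numeric(r), 3))  # 0.25 0.25

# Lower line voltage -> the same re-ignition voltage is a larger share of the peak
for ratio in (0.3, 0.383, 0.5):
    print(f"threshold {ratio:.0%} of peak -> dark {dark_fraction(ratio):.0%} of the time")
```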

With the flicker of discharge lamps used in traffic lighting, a new aspect of flicker was on the verge of being noticed: the stroboscopic effects of lights passing by at high angular speed.
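A short worked example shows why speed matters. Assuming deep modulation, a flickering light that sweeps across the field of view is seen as a row of discrete images instead of a smooth streak, one image per light pulse. The gap between images is the angle travelled per flicker period. The numbers below (30° sweep at 200°/s, 100 Hz flicker) are hypothetical illustration values, not measurements.

```python
# Worked example (illustrative numbers, not measurements): a deeply modulated
# light sweeping across the field of view appears as a row of discrete images,
# one per light pulse; the gap is the angle travelled per flicker period.


def image_spacing_deg(angular_speed_deg_s: float, flicker_hz: float) -> float:
    """Angular distance between successive images of the moving light."""
    return angular_speed_deg_s / flicker_hz


def images_in_sweep(sweep_deg: float, angular_speed_deg_s: float, flicker_hz: float) -> int:
    """Number of distinct images while the light crosses `sweep_deg`."""
    return round(sweep_deg * flicker_hz / angular_speed_deg_s)


# Hypothetical case: lamp crossing 30 degrees of view at 200 deg/s, 100 Hz flicker
print(image_spacing_deg(200, 100))    # 2.0 degrees between images
print(images_in_sweep(30, 200, 100))  # 15 images
```

The faster the light moves relative to the eye, the wider the gaps become, which is why the effect shows up with lights passing at high angular speed even when a static lamp at the same frequency looks steady.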

In the mid-1980s, modern offices were found to make people sick in the long run (sick days rose, respiratory infections increased, and eye strain complaints became more frequent), and all kinds of root causes were thoroughly researched. Besides air-flow issues, including poor humidity management and exposure to germs from those early ventilation and heating systems, research into the "sick building syndrome" identified eye strain caused by long-term exposure (months of working hours) to 100 Hz flicker as part of the issue [1].

This result was not well received. Many believed instead that the causes were poor lighting design, unsuitable computer screens and the like. It was difficult to believe that there could be biological health effects when no flicker was visible at all, especially as the literature stated that retinal cells were not able to follow that flicker frequency.

All the experience with older installations contradicted this research, and the lighting industry was also accused of using this kind of argument to push sales of the new "electronic ballast" technology, which was able to dramatically reduce flicker. It is the fate of most long-term exposure effects to be ignored for a long time.

Bio-Medical Issues of Flicker

In the meantime, biomedical research revealed additional features of the eye: besides the clearly visible movements made when scanning the environment (looking around), a continuous, rapid but small movement was detected. The eye jitters minimally around the actual point of focus, in all directions, at an astonishing rate of 80-100 position changes per second (varying between individuals and tending to rise with age).

This led to wide speculation and heated discussions, especially as the movement was so fast that it exceeded the fusion frequency, creating a contradiction: why should the eye move faster than the receptors are able to create signals? At that time it was believed that the fusion frequency was caused by the limited response time of the retinal cells. The full evolutionary reason for this effect, now called "ocular micro tremor" (OMT), and the full range of purposes this biological flicker generator possibly serves are still subjects of research today.

Since then it has become clear that the fusion frequency is set by the brain's handling of the data stream; the eye itself has been shown to be able to follow optical signals up to at least 200 Hz.

The linear fluorescent lamp moved away from flicker with the T5 lamp, which was operated on electronic ballasts only. Quality vendors advertised "residual ripple" figures in the single-digit percentage range or below as a quality feature of their products. In contrast, compact fluorescent lamps brought flicker back to lighting, especially to the home environment, with the rollout (and finally the legal push) of "energy saver" replacement lamps for private use, whose designers cared more about cost than about the light quality achieved. Most of them show substantial flicker at twice the mains frequency.

With the high-pressure discharge lamps used in street lighting, flicker was reduced, as these lamps continue to emit a substantial portion of their light during the change of polarity.

Quality-oriented manufacturers tackled the flicker of TV sets and computer screens once it was understood to be connected to eye-strain-related health issues. TFT screen technology and fast micro- and display controllers, together with cheap RAM, made low flicker at higher frequencies possible (e.g. "100 Hz TV" was a quality sales argument for a while).

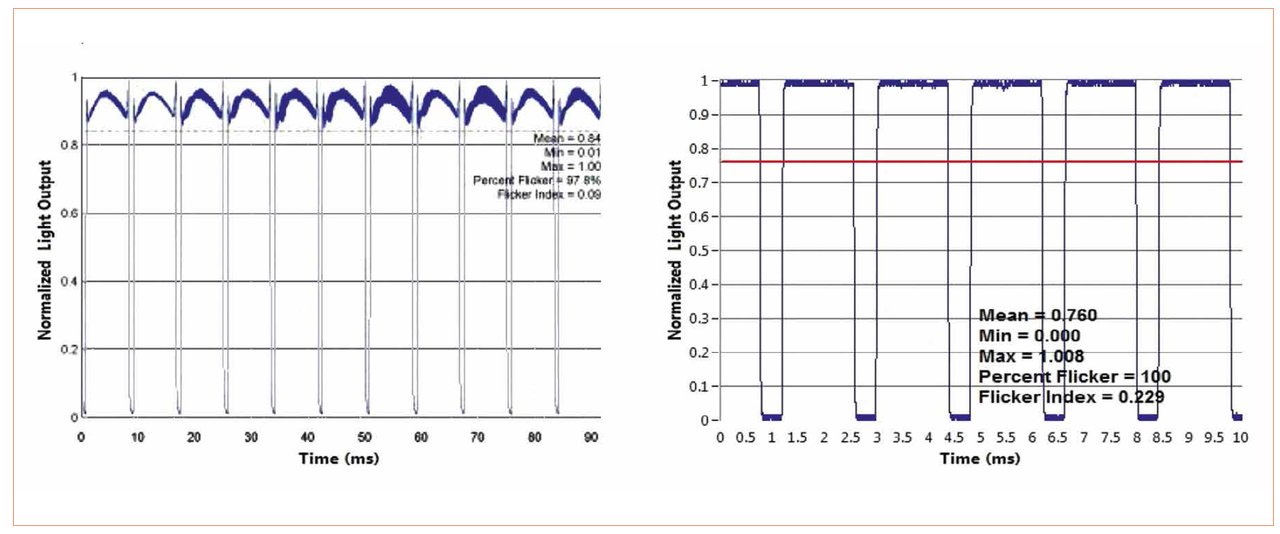

Figure 6: Because LEDs have no inertia in their light emission, poorly designed drivers (left) and PWM dimming (right: e.g. at approx. 80% level) brought flicker back to lighting (Credit: Naomi J. Miller, Brad Lehman; DoE & Pacific Northwest National Laboratory)

The Return of the Flicker

When LEDs first came into TV sets, they were usually driven by internal circuitry with no need for dimming, so no flicker was introduced. But when LEDs came into lighting, dimming and color change (by dimming the color components relative to each other) became easily accessible, and PWM, the technology mainly used for dimming, brought flicker back into mainstream lighting. The LED follows the applied current without any inertia in its light emission. As higher PWM frequencies mean higher cost and losses, flicker at comparatively low frequencies was back: the "flicker knowledge" of the early 1990s and the drive to avoid flicker above the photosensitive epilepsy range in the lighting environment were not accessible to (or less of a priority for) the new generation of engineers designing the driver circuits for a new technology. This went unnoticed until the first camera shots of PWM-lit environments caused trouble, leading (history repeats itself) to special equipment for medical and sports lighting and for areas where high-end camera applications (especially moving cameras) were a requirement.
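Because the LED follows the current without inertia, PWM dimming produces a full on/off square wave. The sketch below assumes an ideal rectangular PWM waveform and computes two common metrics: percent flicker, (max - min)/(max + min), and the flicker index, the area above the mean divided by the total area. The helper names are hypothetical, and the 80% duty cycle mirrors the example in Figure 6.

```python
# Sketch: light output of an LED under PWM dimming, assuming the LED follows
# the current instantly (no inertia) and the PWM signal is ideal.
#   percent flicker = (max - min) / (max + min)
#   flicker index   = area above the mean / total area under the waveform


def pwm_waveform(duty: float, samples_per_period: int = 1000) -> list[float]:
    """One PWM period: full output for `duty` of the time, then off."""
    on_samples = round(duty * samples_per_period)
    return [1.0] * on_samples + [0.0] * (samples_per_period - on_samples)


def percent_flicker(wave: list[float]) -> float:
    hi, lo = max(wave), min(wave)
    return 100.0 * (hi - lo) / (hi + lo)


def flicker_index(wave: list[float]) -> float:
    mean = sum(wave) / len(wave)
    above = sum(v - mean for v in wave if v > mean)
    return above / sum(wave)


wave = pwm_waveform(0.8)  # roughly the 80 % dimming level of Figure 6
print(sum(wave) / len(wave))          # 0.8 -> average light output
print(percent_flicker(wave))          # 100.0 -> full modulation
print(round(flicker_index(wave), 3))  # 0.2
```

Note that the PWM frequency does not appear anywhere in these metrics: an ideal PWM-dimmed LED is always 100% modulated, and the frequency only decides where that modulation falls relative to the thresholds discussed in this article.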

Now, once again, the flicker discussion has ended up at "120 Hz is acceptable", as George Zisses, for example, showed in his excellent article in LpR 53, Jan/Feb 2016, based on published, commonly accepted and applicable standards. This moves flicker out of the clearly perceivable range for most individuals. However, the main research and argumentation, not just in this article, focuses on visible perception, ignoring the known facts about OMT and the eye strain health issues connected to long-term exposure to flickering light.

Most of the material used is based on the implicit assumption that what cannot be perceived should not cause any harm. Science has had to abandon assumptions of this kind more than once; just look at X-rays and radioactivity.

In lighting, the assumption made by the standards sounds correct at first glance, but it is possibly not sufficient for responsible persons and organizations:

- There is no proof that flicker above the stated 120 Hz is harmless

- Little research is available on long-term effects, and what exists was performed specifically with fluorescent lights, whose flicker characteristics are totally different from, and possibly less harmful than, those of today's LED lighting

Poor Research Coverage and Poor Results Reception

Regarding the poor research coverage of long-term effects, there is one prominent investigation of mid- to longer-term effects, conducted by Wilkins et al. as part of the sick building research in the late 1980s. It focused on 100 Hz modulation [1] and showed that, after longer exposure (multiple months) to substantially modulated light (60% modulation), a switch to weakly modulated light (6% at 100 Hz) reduced headaches and eyestrain immediately (within a few weeks). These results are statistically significant. Most of the lighting industry ignored them, as the opposite effect (increased eyestrain and headaches) could not be demonstrated within the four weeks of exposure that the research campaign allowed for.
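To make the two conditions more tangible, assume that "modulation" here means the usual modulation depth M = (L_max - L_min)/(L_max + L_min); this definition is an assumption, as it is not spelled out above. Solving for the ratio between the darkest and the brightest moment of each 100 Hz cycle gives:

```latex
M = \frac{L_{\max} - L_{\min}}{L_{\max} + L_{\min}}
\quad\Longrightarrow\quad
\frac{L_{\min}}{L_{\max}} = \frac{1 - M}{1 + M}

M = 0.60:\quad \frac{L_{\min}}{L_{\max}} = \frac{0.40}{1.60} = 0.25
\qquad
M = 0.06:\quad \frac{L_{\min}}{L_{\max}} = \frac{0.94}{1.06} \approx 0.89
```

Under this reading, the light in the 60% condition dropped to a quarter of its peak a hundred times per second, while in the 6% condition it never fell below roughly 89% of its peak.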

Beyond the limited knowledge, which makes it easy to adopt the notion "there is no effect where there is no proof of an effect", there are some hints that higher-frequency flicker has immediate effects on some embedded mechanisms of our eyes.

Hints of Flicker Effects on Visual Performance:

- The focusing of the eye changes slightly with applied flicker frequencies up to 300 Hz [4]

- The ability to separate fine structures is reduced by flicker at frequencies up to more than 300 Hz [3]

- The visual nerve follows intensity frequencies applied up to 200 Hz

- Transitional effects of flicker have been claimed to be detectable up to 800 Hz

This seems very high given the biochemical nature of the sensors and the relatively low fusion frequency of our visual system. It raises the question of whether there is any plausible explanation of how an invisible modulation at higher frequencies may interfere with our perception system.

To answer it, we need to understand that our visual system follows a layered approach. The retinal cells deliver the actual "reading" to a first layer of neuronal structure, whose results are delivered to a second layer, and the overall result is then passed on to the brain. Speculating a little, one could expect OMT to serve at least two purposes.

Two possible purposes of OMT:

- Cross-adjusting the attenuation of the cells by scanning over the same spatial position with two adjacent cells

- Adding to the resolution by covering the space between the cells, e.g. scanning for the exact position of a transition

The trouble is that both rely on the light source's intensity being constant over the short term. The relative calibration fails if the reference source changes while switching between the cells, and a sharp transition detected during the movement is superimposed on a light source that makes sharp transitions by design. So there is a possible conflict, but it is complex in nature, and research on it has not yet progressed very far.
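The calibration conflict can be illustrated with a deliberately simple, hypothetical toy model, not a physiological one. Two neighboring receptors look at the same spot one micro-tremor step apart. The step of 1/90 s is an assumption derived from the 80-100 position changes per second quoted earlier, and the light is modeled as a sinusoid with mean 1. Under steady light both readings match, so any difference could be attributed to the receptors and calibrated out; under modulated light the readings also differ because the light itself changed between the two looks.

```python
import math

# Toy model (hypothetical, for illustration only): two neighbouring receptors
# look at the same spot one micro-tremor step apart. With steady light both
# readings match; with modulated light they differ because the light changed.


def light(t: float, flicker_hz: float, depth: float) -> float:
    """Sinusoidally modulated light, mean 1, modulation depth `depth`."""
    return 1.0 + depth * math.sin(2 * math.pi * flicker_hz * t)


def worst_calibration_error(flicker_hz: float, depth: float, step_s: float,
                            n_phases: int = 3600) -> float:
    """Largest relative mismatch between the two looks over all start times."""
    worst = 0.0
    for k in range(n_phases):
        t0 = k / (n_phases * flicker_hz)  # start times spread over one cycle
        a = light(t0, flicker_hz, depth)
        b = light(t0 + step_s, flicker_hz, depth)
        worst = max(worst, abs(b - a) / a)
    return worst


step = 1 / 90  # assumed: one tremor step at ~90 position changes per second
for depth in (0.0, 0.06, 0.6):
    err = worst_calibration_error(100, depth, step)
    print(f"100 Hz, depth {depth:.0%}: worst mismatch {err:.1%}")
```

In this toy model the mismatch grows with modulation depth and depends on how the step relates to the flicker period (it vanishes, for example, if the step happens to equal a whole number of periods), so it should be read as an illustration of the argument, not as a prediction.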

Searching for the Safe Side

The simple question of where the edge frequency lies leads to a difficult, multidimensional answer, which possibly needs to be split further according to the different types of retinal cells.

Basic Conditions:

- The edge frequency of the retinal cells could well be as high as 800 Hz or slightly above (the maximum that has been claimed to be visible in experiments)

- The receptors in our retina never work alone; they are always networked within a complex neuronal setting. So the actual edge frequency may be higher, but the basic network layer stops faster signals from propagating to the optic nerve: the edge frequency of the signals delivered to the optic nerve seems to be in the 200 Hz range, but most likely all effects above 120 Hz are blocked by the underlying network from propagating to the optic nerve

- The edge frequency of the visual cortex of the brain seems to be around 25 Hz, called the fusion frequency

- The edge frequency for other parts of the brain affected by the signals from the optic nerve seems to be at or below 30 Hz, the threshold for photosensitive epilepsy
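The layered picture above can be condensed into a small lookup. The values are the approximate, partly speculative edge frequencies listed in the bullets, not established physiological constants, and "may follow" simply means the flicker frequency does not exceed a stage's listed edge frequency.

```python
# Edge frequencies as listed above (approximate, partly speculative values
# from the text; not established physiological constants).
EDGE_FREQUENCIES_HZ = [
    ("visual cortex (fusion frequency)", 25),
    ("other brain areas (photosensitive epilepsy threshold)", 30),
    ("optic nerve, likely cut-off", 120),
    ("optic nerve, observed upper range", 200),
    ("retinal cells, highest claimed", 800),
]


def stages_that_may_follow(flicker_hz: float) -> list[str]:
    """Stages whose listed edge frequency is at or above `flicker_hz`."""
    return [name for name, edge in EDGE_FREQUENCIES_HZ if flicker_hz <= edge]


print(stages_that_may_follow(100))
# ['optic nerve, likely cut-off', 'optic nerve, observed upper range', 'retinal cells, highest claimed']
print(stages_that_may_follow(400))
# ['retinal cells, highest claimed']
```

Read this way, 100 Hz flicker is invisible to the cortex yet may still reach the retina and the optic nerve, which is exactly the gap between "not perceived" and "no effect" discussed in this article.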

This is again proof that the eye is a fantastic optical instrument, and uses various technologies to enhance the view (some of these technologies can also backfire when it comes to visual illusions, but this is a different story).

Figure 7: More stringent flicker regulations are already under discussion. At Lightfair 2015, Naomi J. Miller and Brad Lehman showed, in their lecture "FLICKER: Understanding the New IEEE Recommended Practice", some applications that are recognized as very critical. However, research results that would allow clear limits to be set for staying on the safe side are still missing

Two example tasks the eye performs in an astonishing way:

- Humans are able to resolve structures at (and apparently somewhat below) the spacing of the receptor cells: their performance is better than the pixelation imposed by the receptor cell array would allow. This could well be related to enhancement through the micro tremor

- Humans are able to detect structures based on very small luminosity differences. This is possible only if neighboring receptor cells are exactly calibrated (and continuously recalibrated) against each other, a difficult task for biochemical photoreceptors and also for technical cameras: photographing luminosity transitions at the edge of visibility requires really advanced equipment

Both achievements require (relatively) steady lighting during the scan. Changing light affects the scan results, and the deeper the modulation, the more they are jeopardized.

Humans are used to "looking closely" to perform specific tasks, e.g. resolving fine structures or barely perceptible luminosity transitions. Technically, this translates to concentrating on one point, in other words allowing a longer integration time of the sensor results to get rid of sensor noise and the like. But modulated light, and especially deeply modulated light, will ruin the attempts to get reasonable results out of the OMT enhancement, and may well be a source of stress to the eye and the brain, especially when applied for a longer time or during difficult visual tasks.
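How much a longer integration time can suppress modulation is easy to estimate under a simplifying assumption: treat "looking closely" as averaging the light over a window of length T. For light 1 + m·sin(2πft), the window average still depends on when the window starts, and its worst-case deviation from the mean is m·|sin(πfT)|/(πfT). The sketch below computes this closed form and checks it by brute force; the window lengths are hypothetical illustration values.

```python
import math

# Toy model (assumption): "looking closely" is treated as averaging the
# light 1 + m*sin(2*pi*f*t) over an integration window of length T.
# Worst-case deviation of the window average: m * |sin(pi*f*T)| / (pi*f*T).


def residual_ripple(depth: float, flicker_hz: float, window_s: float) -> float:
    """Closed-form worst-case deviation of the window average from the mean."""
    x = math.pi * flicker_hz * window_s
    return depth * abs(math.sin(x)) / x


def residual_ripple_numeric(depth, flicker_hz, window_s, n=500, phases=180):
    """Brute-force check: average over the window for many start times."""
    worst = 0.0
    for p in range(phases):
        t0 = p / (phases * flicker_hz)  # start times spread over one cycle
        avg = sum(
            1 + depth * math.sin(2 * math.pi * flicker_hz
                                 * (t0 + window_s * (k + 0.5) / n))
            for k in range(n)
        ) / n
        worst = max(worst, abs(avg - 1))
    return worst


for window_ms in (5, 20, 25):
    r = residual_ripple(0.6, 100, window_ms / 1000)
    print(f"100 Hz, 60 % depth, {window_ms} ms window: residual {r:.1%}")
# 5 ms -> 38.2 %, 20 ms -> 0.0 %, 25 ms -> 7.6 %
```

In this model only windows spanning a whole number of flicker periods cancel the modulation completely; otherwise the residual shrinks roughly in proportion to 1/T, so deep modulation combined with short integration windows leaves a substantial ripple in the result.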

Research in Project Prakash on blind people who regained their sight as adults [2] showed that their visual apparatus and object recognition ability adapt to fairly normal vision after a while, but do not acquire some of the more advanced abilities. This could indicate that the complex analysis abilities described above are trained ones, acquired during childhood.

Conclusions & Prospects

Short-term exposure to higher-frequency flicker seems to pose no problem for adults, as long as no advanced visual tasks need to be performed. There are no indications that frequencies above 800 Hz affect humans, but there is enough evidence that flicker up to 400 Hz is not harmless under long-term exposure. The results strongly suggest negative effects such as stress on, or wear of, the visual apparatus. HD video cameras (e.g. those found in high-end smartphones) show severe interference issues with light flickering at lower frequencies, very much like the old TV screens did. Pets, and especially birds, may suffer from flicker that is not visible to humans, but that is a different issue.

Although research cannot yet provide clear evidence of a safe lower threshold, longer-term exposure to higher-frequency flicker should be avoided, especially wherever (younger) children spend longer periods of time, to minimize the possibility of interference with their later visual abilities. To stay on the safe side, responsible manufacturers should avoid flicker below at least 400 Hz for lighting intended for offices, working zones, baby and children's rooms, kindergarten installations and the screen illumination of children's toys.

The existing research is poor, and many aspects of flicker, such as the influence of the flicker waveform, are still unclear today. More research is definitely needed to understand where the safe zone really lies.

References:

[1] Wilkins, A.J., Nimmo-Smith, I.M., Slater, A. and Bedocs, L.: Fluorescent lighting, headaches and eye-strain, Lighting Research and Technology, 21(1), pp. 11-18, 1989

[2] Sinha, P.: Es werde Licht, Spektrum der Wissenschaft, 18.7.2014 (partly based on Held, R. et al.: The Newly Sighted Fail to Match Seen with Felt, Nature Neuroscience, 14, pp. 551-553, 2011)

[3] Lindner, H.: Untersuchungen zur zeitlichen Gleichmässigkeit der Beleuchtung unter besonderer Berücksichtigung von Lichtwelligkeit, Flimmerempfindlichkeit und Sehbeschwerden bei Beleuchtung mit Gasentladungslampen, Thesis, TU Ilmenau, Germany, 1989

[4] Jaschinski, W.: Belastungen des Sehorgans bei Bildschirmarbeit aus physiologischer Sicht, Optometrie, 2, pp. 60-67, 1996