Voice Controlled Lighting that Protects Privacy and Data

LpR 71 Article - page 54: Intelligent lighting systems undoubtedly offer high comfort and energy efficiency. But connected lamps and lighting fixtures, especially when paired with conventional voice control systems like Amazon Alexa, Apple Siri or OK Google, are also becoming targets for uninvited guests. Genia Shipova, Director Global Communications at Snips, explains the benefits and technology of a new approach offering local voice control and artificial intelligence.

Intelligent lighting systems undoubtedly offer high comfort and energy efficiency. But connected lamps and lighting fixtures are also becoming targets for uninvited guests. Like many connected devices, smart lights potentially open a gateway for cyber-attacks or a backdoor to information that should remain within users' homes or offices. The problem becomes even more pressing when lights are not only 'smart' but also voice-controlled, since voice data is a biometric identifier and is as unique to users as their fingerprints. As more homes and offices are connected with voice-controlled lighting installations, the threat of breaches only increases. Yet this does not have to be the case. Lighting can also be controlled by voice locally, on-device, in compliance with European regulations and while protecting privacy.

With the European General Data Protection Regulation (GDPR) coming into effect this year, security and privacy must also be taken into consideration for intelligent lighting devices. While the introduction of voice-controlled lighting undoubtedly offers new experiences for end users, companies must take proactive steps to avert damage to themselves and their customers in the wake of this new legislation.

Companies have traditionally approached adding voice to products by leveraging cloud servers to perform machine learning and process voice data. This offers high-performance voice recognition but potentially jeopardizes security and privacy.

Process Speech Locally

The AI voice specialist Snips is committed to protecting privacy when using voice to control lights, lighting fixtures and many other connected devices. The company's approach is impressively simple, but the solution is anything but trivial because it builds on mechanisms of artificial intelligence and machine learning.

The voice interface is based on the idea of completely local language processing and control. This means that the private user data never leaves the local installation. Accordingly, the system is private by design and compliant with even the strictest data protection regulation.

This approach has significant implications for smart lighting companies. First of all, there is the question of effort: Why should the cloud be used to manage such a simple procedure as switching on the light? Secondly, there is the question of accessibility: why should switching on or dimming the light depend on whether the Internet is available? If the Internet fails or the router is defective, customers could literally be caught in the dark.

Simple requirements call for simple solutions. Switching on the light is based on very few voice commands. There is no need to send a command like "turn on the light" or "dim the lights in the kitchen by 30%" to the cloud for processing. OEMs can embed voice interfaces on microcontrollers that work offline and are tailored to the product and brand. This is cost-efficient and makes profitability easier to calculate, because no costs are incurred for the actual use.

Embedded Solutions for OEMs

Companies like Amazon and Google force the OEMs who integrate with their platforms to sacrifice a degree of their brand identity and customer relationships. For lighting OEMs, an embedded voice platform allows them to decouple their offers from aggressive third parties, who might market competing offers or release their own, and to take more control of their customers' voice experience.

The architecture is designed to enable manufacturers of virtually any device to design and build customized voice solutions, but it can also be easily used by developers and makers. For this purpose, parts of the technology stack are open to the public, making it possible for everybody to set up a low-cost voice-controlled lighting system.

Selecting the Fitting Hardware

The hardware set-up to create a functional solution depends on the level of sophistication of the intended use case. It is important to understand that all hardware components must be matched in their performance to each other in order to enable the desired functionality. This is, at the end of the day, a question of finding a consistent set-up to balance the intended level of functionality, comfort and pricing.

The choice of the appropriate microphone, for example, illustrates the complexity involved in developing marketable products that meet customers' expectations. Many of the devices used for voice control purposes (e.g. home assistants, speakers, entertainment hubs, coffee machines, lighting control) typically sit somewhere in a room, at a certain distance away from the person speaking. And just as a remote control lets you switch TV channels without leaving the couch, it is important to enable voice-controlled devices to understand what is said without having to walk up close to them and start shouting. Furthermore, we don't want the devices to start triggering commands when we are not explicitly asking them to do so, so we expect some tolerance to noise, music or conversations others might be having in the room.

Summing up, a good microphone for this purpose would:

• Allow the user to speak from anywhere in the room, that is, from long distances and from any angle

• Be resistant to various kinds of noise (music, conversations, random sounds)

Finding a microphone matching these criteria is a bit of a challenge. Cheap, generic USB microphones turn out to be unsuited for the task. They only capture sound coming from up close, and from a specific direction. Microphone arrays, which combine several microphones, are better suited. They do not by themselves solve the problem of understanding what we are saying to the devices, but they certainly are an essential component.

Many of today's voice-enabled devices work in the following way: they remain passive until the user pronounces a special wake word, or hotword, such as "Alexa", "OK Google" or "Hey Snips", which tells the device to start listening carefully to what the user is saying. Once in active listening mode, the device attempts to transcribe the audio signal into text, performing so-called Automatic Speech Recognition [1], or ASR for short. The goal is to subsequently understand what the user is asking for and act accordingly.

Hotword Detection and Automatic Speech Recognition

Hotword detection and ASR are two distinct problems, and they are usually treated independently. Good hotword detection software must have high recall and high precision: it should always detect a hotword when it is spoken, and it should absolutely not detect a hotword when it has not been spoken. Indeed, we don't want to have to repeat ourselves when triggering an interaction, and we don't want the device to start listening when it hasn't been asked to.
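
Both requirements can be quantified. The following is a minimal sketch, assuming a hypothetical evaluation set in which each audio clip is labeled with whether the hotword was really spoken. The function name and the sample labels are illustrative only and are not part of any Snips tooling.

```python
def precision_recall(detected, spoken):
    """Compute precision and recall for a hotword detector.

    detected[i] is True if the detector fired on clip i.
    spoken[i] is True if the hotword was really spoken in clip i.
    """
    pairs = list(zip(detected, spoken))
    true_pos = sum(1 for d, s in pairs if d and s)
    false_pos = sum(1 for d, s in pairs if d and not s)
    false_neg = sum(1 for d, s in pairs if not d and s)
    # Precision: share of detections that were real hotwords.
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    # Recall: share of spoken hotwords that were detected.
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall


# Hypothetical labels for five clips (assumption, not measured data).
detected = [True, True, False, False, True]
spoken = [True, False, True, False, True]
precision, recall = precision_recall(detected, spoken)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Low recall means users have to repeat the hotword; low precision means the device starts listening when nobody asked it to.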

Similarly, the ASR processes the audio signal and translates it into the corresponding words. A spoken sentence is nothing more than a sequence of phonemes [2]. Therefore, a fair bit of the complexity lies in capturing a reasonably good audio signal so that the ASR can differentiate between phonemes and construct the most accurate text sentence. If noise levels are too high and the audio saturates, the ASR could misunderstand a word.

There are various factors which affect the quality of hotword detection and ASR. Some words are simply not suitable as hotwords, for instance if they are difficult to pronounce ("rural") and hence difficult to detect, or if they are too close to words you would commonly use ("hey"), as they would constantly trigger the device when you are having a conversation nearby. Good acoustic models are trained so as to be robust to ambient noise, sound levels, variation in pronunciations and more. However, if the microphone can consistently provide a clean audio signal regardless of the situation, it will drastically improve the performance of hotword detection and ASR, and hence the end user experience. That is why microphone arrays usually feature a dedicated chip, a Digital Signal Processor (DSP) [3], for performing things like noise reduction, echo cancellation and beamforming [4].
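
To give an intuition for beamforming, here is a minimal delay-and-sum sketch. It assumes that the per-microphone delays of the target sound are already known and are whole numbers of samples; real DSP firmware estimates the delays, handles fractional values and does far more. The function name and the toy signal are hypothetical.

```python
def delay_and_sum(channels, delays):
    """Average several microphone channels after aligning them.

    channels: list of sample lists, one per microphone, same sample rate.
    delays: integer sample delay of the target sound in each channel.
    """
    # Only keep the part where every aligned channel still has samples.
    length = min(len(ch) - d for ch, d in zip(channels, delays))
    return [
        sum(ch[d + i] for ch, d in zip(channels, delays)) / len(channels)
        for i in range(length)
    ]


# Toy example: the same signal reaches the second microphone 2 samples later.
signal = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0]
mic_a = signal
mic_b = [0.0, 0.0] + signal

steered = delay_and_sum([mic_a, mic_b], [0, 2])    # aligned on the source
unsteered = delay_and_sum([mic_a, mic_b], [0, 0])  # no alignment
```

With the correct delays the two channels add up coherently and `steered` reproduces the signal, whereas the unaligned average partly cancels it. Sound arriving from other directions would be attenuated in the same way.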

The example shows the interdependencies between the quality of the hardware components used and the desired comfort and performance of the whole solution. Objectively benchmarking microphone arrays is not straightforward, as results will of course depend on the underlying acoustic models.

Complex Language Training

Although the requirements for voice-controlled lighting may be limited for the moment, this approach is still at the forefront of voice adoption, and additional use cases will develop over time. For example, voice-controlled lighting setups shouldn't just control lighting in one room, but rather in the entire house or office.

Most cloud-based voice platforms introduce additional use cases by using machine learning to analyze how people use their assistants. Yet this often delivers an inferior user experience at first, commonly referred to as the "cold start" problem: voice assistants frequently aren't very good right after a user turns them on. This problem is solved by performing machine learning before the assistant gets into the hands of the user. It is therefore essential to work together with the respective manufacturers to train their assistants directly for a use case and brand.

The Ecosystem

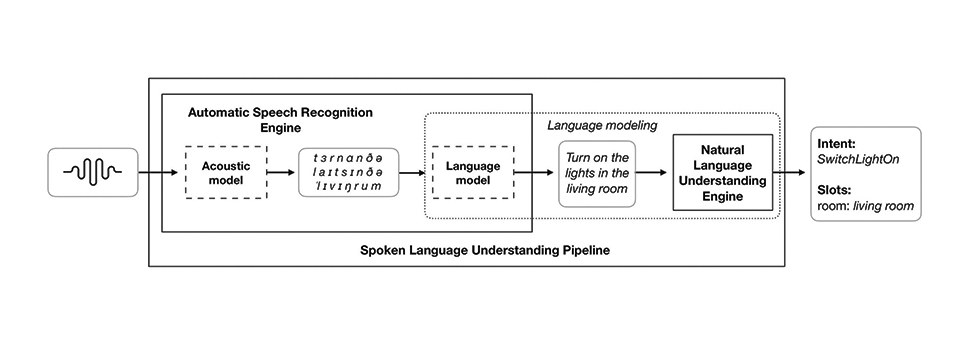

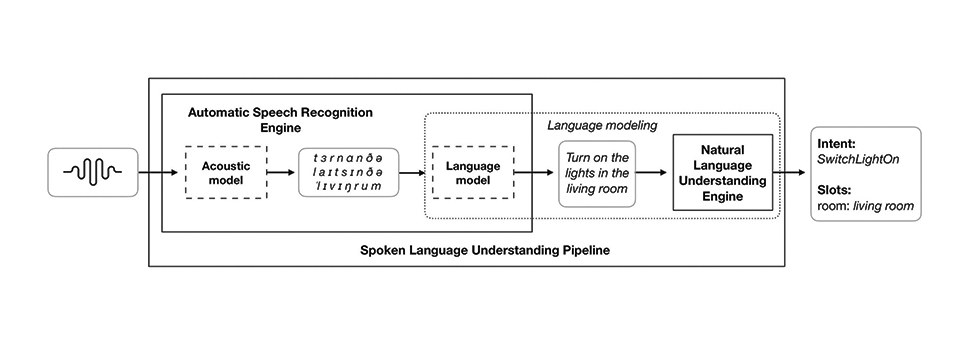

The ecosystem comprises a web console to build voice assistants and train the corresponding Spoken Language Understanding (SLU) engine, made of an Automatic Speech Recognition (ASR) engine and a Natural Language Understanding (NLU) engine. The console can be used as a self-service development environment by businesses or individuals, or through professional services.

The platform is free for non-commercial use. Since its launch in the summer of 2017, over 23,000 voice assistants have been created by over 13,000 developers. The currently supported languages are English, French and German, with additional NLU support for Spanish and Korean. More languages are added regularly.

An assistant is composed of a set of skills – e.g. SmartLights, SmartThermostat, or SmartOven skills for a SmartHome assistant – that may be either selected from preexisting ones in a skill store or created from scratch on the web console. A given skill may contain several intents, or user intentions – e.g. SwitchLightOn and SwitchLightOff for a SmartLights skill. Finally, a given intent is bound to a list of entities, or slots, that must be extracted from the user's query – e.g. room for the SwitchLightOn intent. When a user speaks to the assistant, the SLU engine trained on the different skills will handle the request by successively converting speech into text, classifying the user's intent, and extracting the relevant slots.
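
The hierarchy of assistant, skills, intents and slots can be sketched as a small data structure. The class names, the `ROOMS` list and the keyword matcher below are assumptions made for illustration; the keyword matcher is only a stand-in for a trained NLU engine and does not reflect the actual Snips console or its API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Intent:
    name: str
    slots: List[str] = field(default_factory=list)


@dataclass
class Skill:
    name: str
    intents: List[Intent] = field(default_factory=list)


@dataclass
class Assistant:
    name: str
    skills: List[Skill] = field(default_factory=list)


# The example names are taken from the text above.
smart_lights = Skill("SmartLights", [
    Intent("SwitchLightOn", ["room"]),
    Intent("SwitchLightOff", ["room"]),
])
assistant = Assistant("SmartHome", [smart_lights])

ROOMS = ("kitchen", "bedroom", "living room")  # hypothetical slot values


def toy_understand(text):
    """Keyword stand-in for NLU: classify the intent, then extract the slot."""
    text = text.lower()
    words = text.split()
    if "on" in words:
        intent = "SwitchLightOn"
    elif "off" in words:
        intent = "SwitchLightOff"
    else:
        return None  # no supported intent recognized
    room = next((r for r in ROOMS if r in text), None)
    return {"intent": intent, "slots": {"room": room}}


result = toy_understand("Turn on the light in the kitchen")
```

Here `result` holds the intent `SwitchLightOn` with the slot `room` set to `kitchen`, which is the kind of structured output the SLU engine hands on to the rest of the system.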

Once the user's request has been processed, a dialog management component uses the information extracted from the query to provide feedback to the user or to perform an action. This may take multiple forms, such as an audio response via speech synthesis or a direct action on a connected device – e.g. actually turning on the lights for a SmartLights skill. Figure 1 illustrates the typical interaction flow.

Figure 1: Interaction flow
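
The last step of this flow, turning a recognized intent into an action plus feedback, can be sketched as a simple dispatch table. The shape of the `result` dictionary, the handler names and the `lights` dictionary standing in for real fixtures are all assumptions for illustration, not the platform's actual interface.

```python
def switch_light_on(slots, lights):
    room = slots.get("room") or "default"
    lights[room] = True  # stand-in for driving a real fixture
    return f"The {room} light is now on."


def switch_light_off(slots, lights):
    room = slots.get("room") or "default"
    lights[room] = False
    return f"The {room} light is now off."


# Map each intent name to the function that carries it out.
HANDLERS = {
    "SwitchLightOn": switch_light_on,
    "SwitchLightOff": switch_light_off,
}


def handle(result, lights):
    """Perform the action for a parsed request and return the feedback text."""
    handler = HANDLERS.get(result["intent"])
    if handler is None:
        return "Sorry, I did not understand that."
    return handler(result["slots"], lights)


lights = {}
feedback = handle({"intent": "SwitchLightOn", "slots": {"room": "kitchen"}}, lights)
print(feedback)
```

In a real device the returned text would be passed to speech synthesis, and everything shown here would run locally, without a network round trip.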

Language Modeling

The language modeling component of the platform is responsible for the extraction of the intent and slots from the output of the acoustic model. This component is made up of two closely-interacting parts. The first is the language model (LM), that turns the predictions of the acoustic model into likely sentences, considering the probability of co-occurrence of words. The second is the Natural Language Understanding (NLU) model, that extracts intent and slots from the prediction of the Automatic Speech Recognition (ASR) engine.

Figure 2: Spoken language understanding pipeline
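
How a language model favors likely sentences based on word co-occurrence can be illustrated with a tiny bigram model. This is a sketch under stated assumptions: the three training sentences, the add-one smoothing and the function names are chosen for illustration and do not describe the model the platform actually uses.

```python
import math
from collections import Counter


def train_bigram(sentences):
    """Count word pairs and their left-hand contexts in the training text."""
    contexts, bigrams = Counter(), Counter()
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        contexts.update(words[:-1])
        bigrams.update(zip(words, words[1:]))
    vocab_size = len({w for pair in bigrams for w in pair})
    return contexts, bigrams, vocab_size


def log_prob(sentence, contexts, bigrams, vocab_size):
    """Log-probability of a sentence with add-one smoothing."""
    words = ["<s>"] + sentence.split() + ["</s>"]
    total = 0.0
    for prev, word in zip(words, words[1:]):
        total += math.log((bigrams[(prev, word)] + 1) / (contexts[prev] + vocab_size))
    return total


# Hypothetical in-domain training sentences for a lighting assistant.
model = train_bigram(["turn on the light", "turn off the light", "dim the light"])

# Two acoustically similar candidates, as an acoustic model might propose.
candidates = ["turn on the lie", "turn on the light"]
best = max(candidates, key=lambda s: log_prob(s, *model))
```

Because "the light" occurs in the training text and "the lie" does not, the model picks "turn on the light" as the more probable sentence.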

In typical commercial large vocabulary speech recognition systems, the LM component is usually the largest in size, and can take terabytes of storage. Indeed, to account for the high variability of general spoken language, large vocabulary language models need to be trained on very large text corpora. The size of these models also has an impact on decoding performance: the search space of the ASR is expanded, making speech recognition harder and more computationally demanding. Additionally, the performance of an ASR engine on a given domain will strongly depend on the perplexity of its LM on queries from this domain, making the choice of the training text corpus critical. This question is sometimes addressed through massive use of users' private data.

One option to overcome these challenges is to specialize the language model of the assistant to a certain domain, e.g. by restricting its vocabulary as well as the variety of the queries it should model. In fact, while the performance of an ASR engine alone can be measured using e.g. the word error rate, Snips assesses the performance of the SLU system through its end-to-end, speech-to-meaning accuracy, i.e. its ability to correctly predict the intent and slots of a spoken utterance. As a consequence, it is sufficient for the LM to correctly model the sentences that are in the domain that the NLU supports.
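
The difference between the two metrics can be made concrete. The sketch below computes the word error rate as a word-level edit distance divided by the reference length, and end-to-end accuracy as the share of utterances whose predicted intent and slots match exactly. The function names and the sample utterance are assumptions for illustration, not Snips' evaluation code.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words.

    Assumes a non-empty reference transcript.
    """
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, 1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, 1):
            cur.append(min(
                prev[j] + 1,                           # deletion
                cur[j - 1] + 1,                        # insertion
                prev[j - 1] + (ref_word != hyp_word),  # substitution or match
            ))
        prev = cur
    return prev[-1] / len(ref)


def end_to_end_accuracy(predicted, expected):
    """Share of utterances whose intent and slots are both predicted correctly."""
    correct = sum(1 for p, e in zip(predicted, expected) if p == e)
    return correct / len(expected)


# One word is transcribed wrongly ("a" instead of "the") ...
wer = word_error_rate("turn on the kitchen light", "turn on a kitchen light")

# ... yet the meaning can still be recovered correctly.
expected = [{"intent": "SwitchLightOn", "slots": {"room": "kitchen"}}]
predicted = [{"intent": "SwitchLightOn", "slots": {"room": "kitchen"}}]
accuracy = end_to_end_accuracy(predicted, expected)
```

In this example the word error rate is 0.2 while the end-to-end accuracy is 1.0: a transcription error on a word that carries no meaning for the domain does not hurt the outcome.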

Conclusion

The system is well prepared for future tasks, and the key element for this is probably the design of the language model: because it is specialized to the supported domain, its size is greatly reduced and the decoding speed increases. The resulting ASR is particularly robust within the use case, with an accuracy unreachable under the hardware constraints for an all-purpose, general ASR model. This design principle makes it possible to run the SLU component efficiently on small devices with high accuracy, as required e.g. for smart lighting use cases.

Figure 3: The processor capacity is defined by the intended use case

References:

[1] Automatic Speech Recognition: https://en.wikipedia.org/wiki/Speech_recognition

[2] Phonemes: https://en.wikipedia.org/wiki/Phoneme

[3] Digital Signal Processor (DSP): https://en.wikipedia.org/wiki/Digital_signal_processor

[4] Beamforming: https://en.wikipedia.org/wiki/Beamforming